AI-driven image synthesis has come a long way, with accessibility barriers and technical constraints steadily falling.

Yet even with these advances, AI image generators still struggle to perform consistently: progress is gradual and results can be frustrating. As we have already seen, the Playground AI vs. Midjourney competition is still on.

There is, however, a glimmer of hope in Meta's new creation, Meta Cm3Leon, an AI model that Meta says could substantially improve text-to-image generation.

What Is Meta Cm3Leon?

Meta has recently introduced Cm3Leon, an AI model that Meta says sets new benchmarks for text-to-image synthesis. One of its standout features is its ability to generate descriptive captions for images, which Meta asserts is a significant advance that could pave the way for more sophisticated image-understanding models.

Cm3Leon's main strength is its ability to produce coherent images that closely follow the input prompt. Meta believes this performance is a substantial step toward higher-fidelity image generation and understanding.

How does Meta Cm3Leon differ from other models?

Popular image generation tools such as Playground AI, Leonardo AI and Midjourney, along with models like DALL-E 2, Google's Imagen and Stable Diffusion, rely on computationally intensive diffusion processes. Cm3Leon, by contrast, is a transformer model: it uses a mechanism called "attention" to weigh the relevance of each piece of input data, whether text or images. The transformer architecture also makes training faster and easier to parallelize, so it is more feasible to train larger models with reasonable compute requirements.
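Attention is easier to grasp with a concrete example. The sketch below implements generic scaled dot-product attention, the standard formulation used in transformers, in plain Python: each query vector scores every key vector, a softmax turns those scores into weights, and the output is the weighted average of the value vectors. It illustrates the general mechanism only and is not Meta's Cm3Leon code; the function names and toy vectors are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Generic attention sketch (hypothetical helper, not Meta's code).

    Each argument is a list of vectors (lists of floats). Returns one
    output vector per query: a relevance-weighted average of the values.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # How relevant is each key to this query? (dot product, scaled by sqrt(d))
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Blend the value vectors according to those relevance weights
        blended = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(blended)
    return outputs


if __name__ == "__main__":
    # Toy "tokens" attending to each other (self-attention)
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    for row in scaled_dot_product_attention(tokens, tokens, tokens):
        print([round(x, 3) for x in row])
```

In self-attention, the same token vectors serve as queries, keys and values, so each token's output mixes in information from the tokens most relevant to it. Dividing by the square root of the vector size keeps the scores from growing as vectors get longer, which would otherwise push the softmax toward near one-hot weights.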

Meta claims that Cm3Leon is more efficient than most transformers, requiring only a fraction of the compute power and a smaller training dataset than previous transformer-based methods.

How is Cm3Leon trained?

To train Cm3Leon, Meta used a dataset of millions of licensed images from Shutterstock. The most capable of the several Cm3Leon versions Meta built has 7 billion parameters, about twice as many as DALL-E 2. Parameters are the values a model learns from its training data; they largely determine how well it can solve a given problem, such as generating images or text.

A key factor behind Cm3Leon's performance is a technique known as supervised fine-tuning (SFT). SFT has already proven effective for training text-generating models, and Meta has now adapted it to images. According to Meta, SFT improved not only Cm3Leon's image generation but also its ability to caption images, answer questions about them, and edit them according to text instructions.

The Real Power of Meta Cm3Leon

Cm3Leon is also notably good at following detailed instructions, both for editing existing images and for placing objects precisely. For instance, when prompted to “Generate a high-quality image of a room with a sink and a mirror, with a bottle at a specific pixel location (for example, 200, 155),” the model can create a coherent image that contains every specified element. DALL-E 2, by contrast, often misses such nuances and sometimes omits the specified objects altogether.

Unlike DALL-E 2, Cm3Leon can handle a wide range of prompts, generating both short and long captions and answering questions about specific images. Meta also claims the model outperforms specialized image-captioning models, despite having less text in its training set.

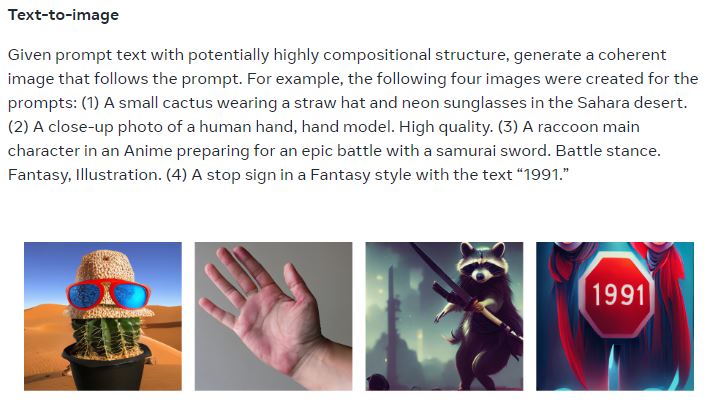

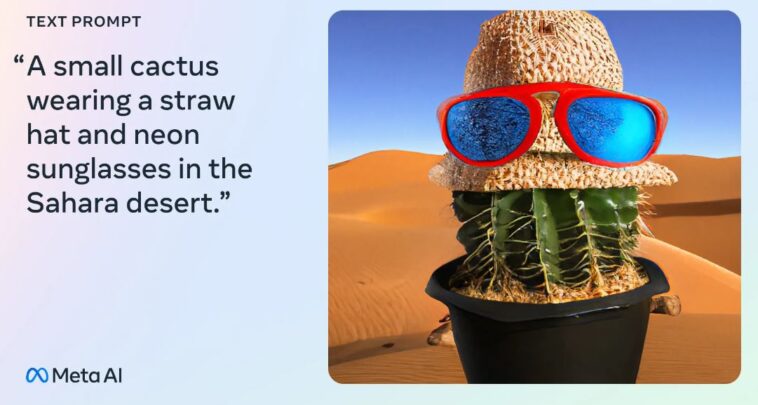

Meta's official website, ai.meta.com, showcases Cm3Leon's capabilities in:

- Text-to-image generation

- Text-guided image editing

- Text tasks, such as captioning and answering questions about images

- Object to Object Generation

- Structure-guided image editing, and much more

Addressing concerns

Concerns about bias, however, remain pervasive across generative AI. Earlier models, including DALL-E 2, have been criticized for reinforcing societal biases. Meta acknowledges the issue but has yet to address potential biases in Cm3Leon, stating only that the model may reflect biases present in its training data.

Conclusion

As the AI industry progresses, generative models like Cm3Leon are becoming increasingly sophisticated. Challenges such as bias and other ethical concerns still need to be resolved, but Meta emphasizes transparency as a driver of progress.

Meta has not yet disclosed any plans to release Cm3Leon, possibly because of the controversies surrounding open-source art generators. The future of this technology therefore remains uncertain until Meta shares more details.

How To Use Meta Cm3Leon?

At the time of writing, Meta has not made Cm3Leon available for the public to try. Keep an eye on this space: as soon as that changes, we will publish a step-by-step guide that walks you through the process.

Meanwhile, if you are interested in other FREE AI text-to-image tools, head over to our AI tools section. Remember to keep learning, whether or not you end up using a given tool. Happy learning!
